Too many SQL projects just stop at SELECT * FROM cleaned_data and call it a day. I wanted to build a real SQL data warehouse — one that captures the actual lifecycle of data: from raw ingestion, through transformation, into a dimensional model ready for analytics.

SQL Data Warehouse

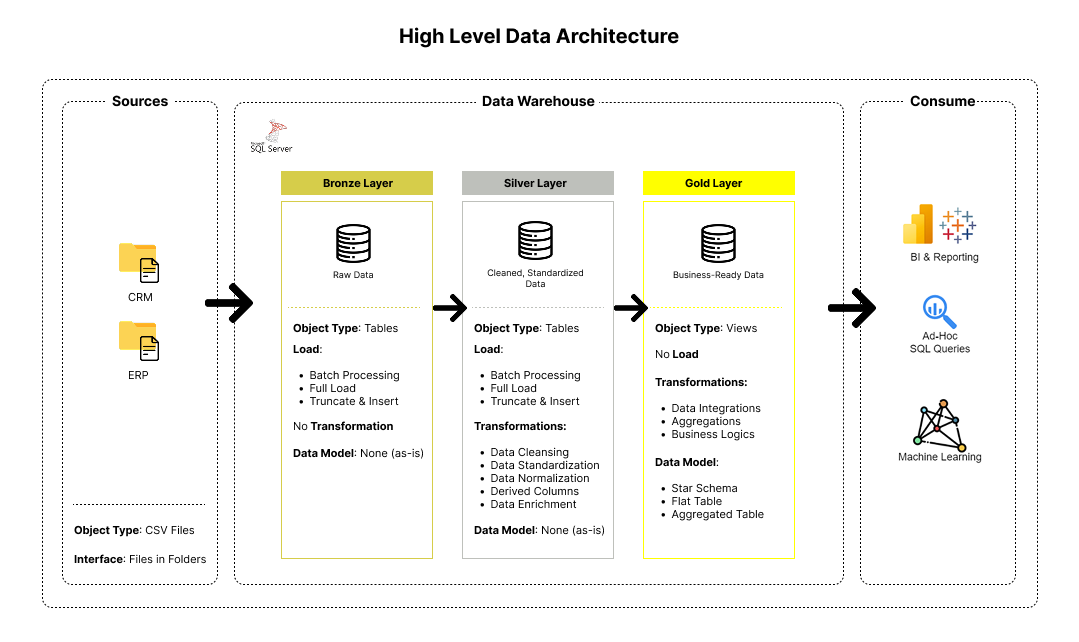

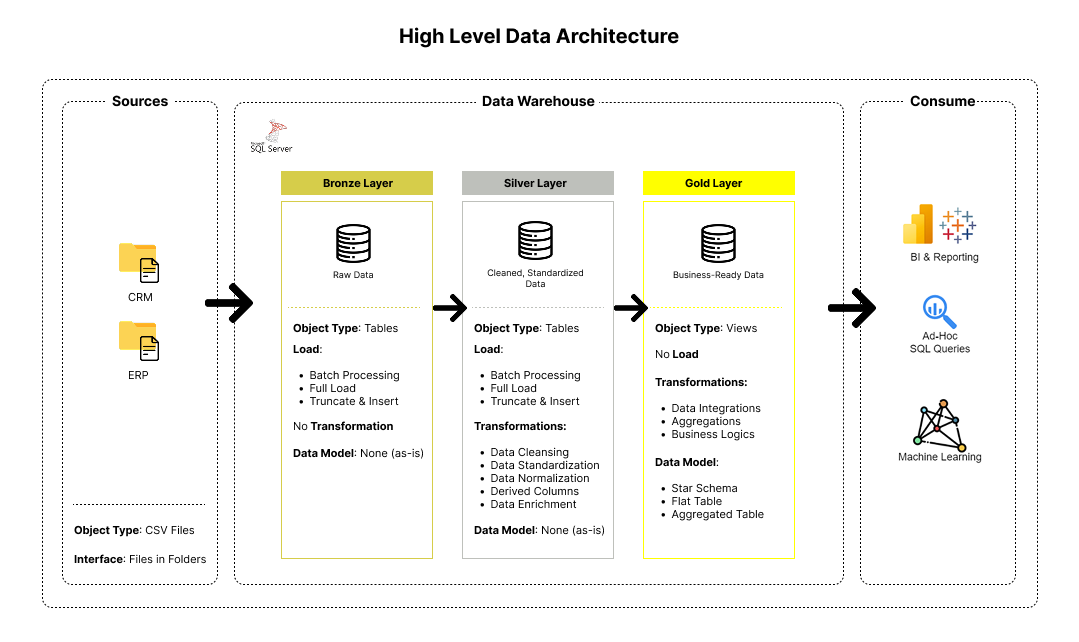

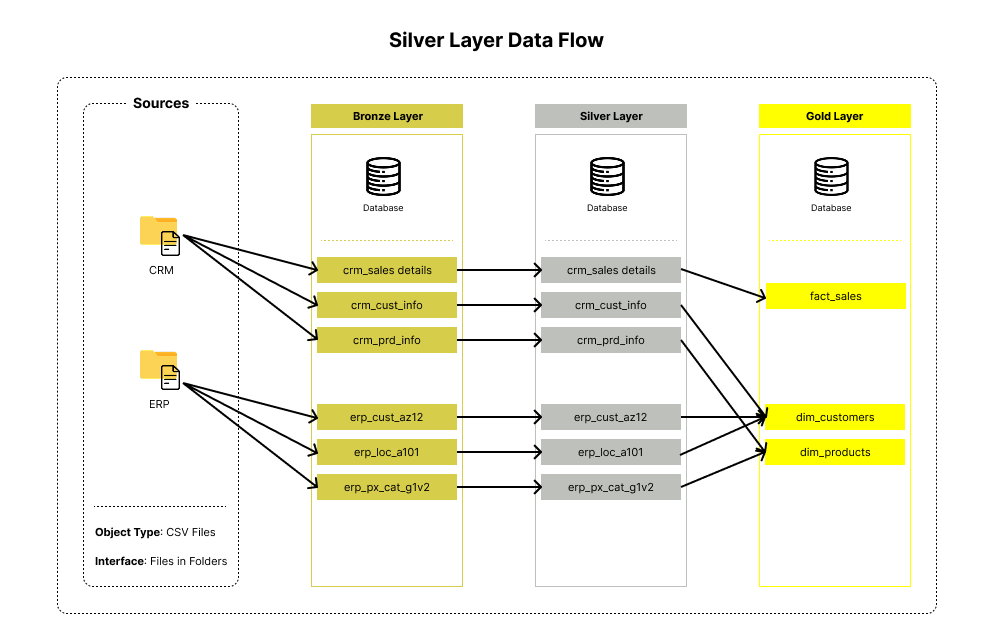

This project simulates the development of a modern, modular, and production-style data warehouse using SQL Server, structured around the Medallion Architecture (Bronze → Silver → Gold). It’s designed for engineers who want to understand how data moves, evolves, and ultimately drives decision-making.

Architecture Overview

The project follows the Medallion Architecture, breaking down the data journey into three logical layers:

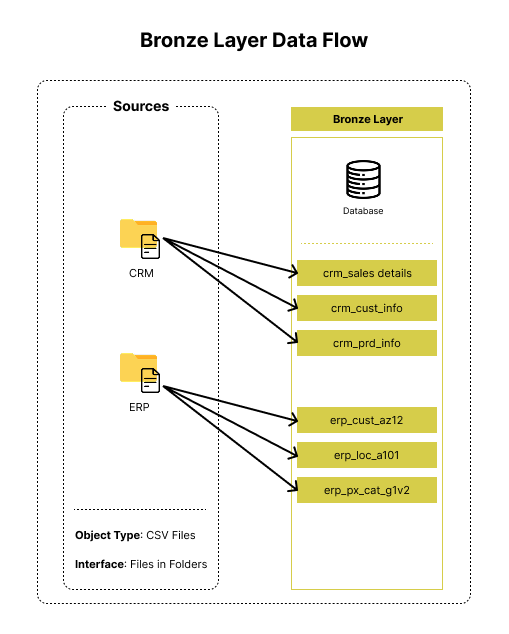

Bronze Layer – Raw Data

Raw CSV exports from ERP and CRM systems (simulated). These are ingested as-is into SQL Server, with no transformation or filtering. This layer acts as the immutable source of truth.

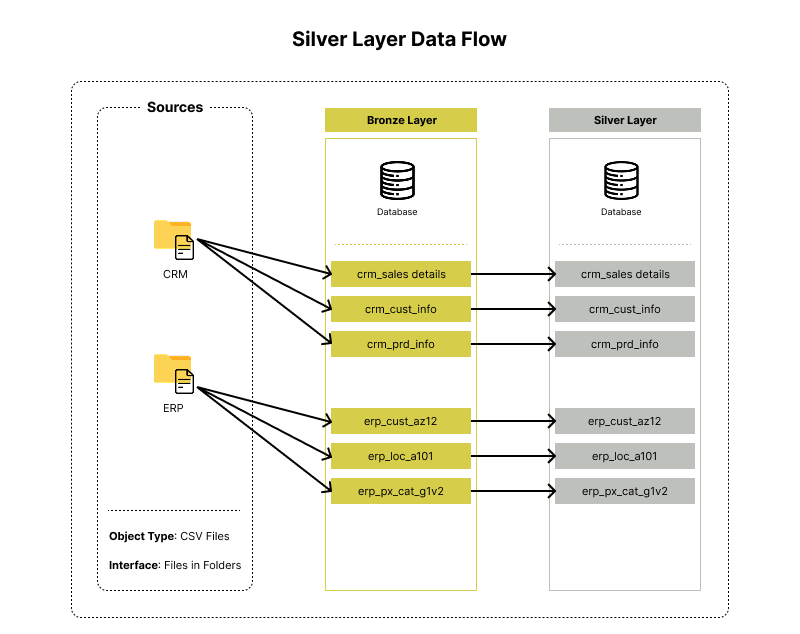

Silver Layer – Cleaned & Standardized

The real work begins here: data cleaning, deduplication, type harmonization, and joining begin. The goal: produce reliable and consistent data ready for modeling.

Gold Layer – Business-Focused Star Schema

Here’s where we implement dimensional modeling. Fact and dimension tables are built for analytical use cases like customer segmentation, sales trend analysis, and product performance.

Workflow

This isn’t just a folder with a few scripts — it’s a structured pipeline. Here’s how data moves through the system:

1. Ingestion (Bronze)

CSV files (datasets/) are loaded into SQL Server using simple bulk import techniques. No cleaning, no changes — just persistence.

📂 Example:

bronze/customers_bronze.sql

2. Transformation (Silver)

We apply SQL logic to:

- Remove nulls

- Resolve duplicates

- Normalize inconsistent formats

- Join ERP and CRM datasets where needed

📂 Example:

silver/customers_silver.sql

Each table in the Silver layer has a direct lineage back to a Bronze source — and can be rebuilt deterministically.

3. Modeling & Analytics (Gold)

This is where we implement a Star Schema:

- Fact Tables:

fact_sales - Dimension Tables:

dim_customer,dim_product,dim_date,dim_region

These are built for fast aggregation, flexible slicing, and compatibility with BI tools. It’s clean, powerful, and makes analysts happy.

📂 Example:

gold/fact_sales.sql

Analytics: Insights, Not Just Tables

Once the Gold layer is in place, it’s time to extract value. The project includes a set of business-ready queries to answer real questions:

- What are the top-selling products by region?

- Which customers are most engaged?

- How do seasonal trends impact revenue?

These aren’t toy queries — they’re structured to support dashboarding, reporting, and executive decisions.

📂 Bonus: Queries designed to integrate with Tableau or Power BI.

Data Quality Testing

You can’t have good data without good tests.

This project includes automated SQL tests that validate:

- Null constraints

- Primary/foreign key relationships

- Expected value ranges (e.g. revenue > 0)

- Duplicate prevention

📂 tests/quality_checks_silver.sql

📂 tests/quality_checks_gold.sql

Think of it like dbt tests — but hand-coded, so you understand how they work.

Tools Used

All tools used are free and accessible:

| Tool | Purpose |

|---|---|

| SQL Server | Core data warehouse engine |

| SSMS | Development IDE |

| Draw.io | Architecture & ER diagrams |

| Notion | Project documentation |

| CSV Datasets | Simulated ERP & CRM sources |

📁 All datasets are available in the datasets/ folder in the repo.

Project Structure

📦

├─ README.md

├─ assets

│ ├─ Bronze Layer Data Flow.png

│ ├─ Data Architecture.png

│ ├─ Gold Layer Data Flow.png

│ └─ Silver Layer Data Flow.png

├─ datasets

│ ├─ source_crm

│ │ ├─ cust_info.csv

│ │ ├─ prd_info.csv

│ │ └─ sales_details.csv

│ └─ source_erp

│ ├─ CUST_AZ12.csv

│ ├─ LOC_A101.csv

│ └─ PX_CAT_G1V2.csv

├─ docs

│ └─ data_catalog.md

├─ scripts

│ ├─ bronze

│ │ ├─ ddl_bronze.sql

│ │ └─ proc_load_bronze.sql

│ ├─ gold

│ │ └─ ddl_gold.sql

│ ├─ init_database.sql

│ └─ silver

│ ├─ ddl_silver.sql

│ └─ proc_load_silver.sql

└─ tests

├─ quality_checks_gold.sql

└─ quality_checks_silver.sql